What do we mean by AI?

As an engineer, I focus on definitions, so I will talk about both Generative AI and the broader range of technologies that enable computers to simulate human intelligence and problem-solving capabilities. We’ve worked on three categories at Lighthouse:

Predictive AI

- Forecasts future outcomes

- Works best with structured, mostly numerical data

- The “black box” can be unpacked and thus understood, to some extent

- Data-heavy, with mostly numerical outputs

- Examples: occupancy prediction

Generative AI

- Creates content (e.g., images, videos, music, or text)

- Works best with unstructured, ideally text or image data

- Fully “black box” (at least today, it’s basically impossible to explain why the output is what it is)

- Produces creative outputs that mimic human-like patterns

- Examples: summarizing a long article

Automation

- Automates well-defined human processes

- Doesn’t require data, but needs detailed process description and programming

- Fully understandable and easy to adjust

- Performs exactly as programmed

- Examples: automating the gathering of multiple data sources into one Excel file

Automation is sometimes referred to as AI, yet it’s not AI from a technical perspective. It doesn’t “learn” from data patterns. I’ll focus on the first two types of solutions and how we approach building them at Lighthouse.
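To make the distinction concrete, here is a minimal Python sketch. It is not Lighthouse code. The function names (`merge_sources`, `fit_line`) and the toy numbers are assumptions made up for illustration. The first function is automation: it does exactly what it was programmed to do, whatever the data looks like. The second is a tiny predictive model: its parameters are not written by hand but estimated from the data with ordinary least squares.

```python
def merge_sources(sources):
    """Automation: gather rows from several sources into one table.

    The behaviour is fixed by the code; nothing is learned from the data.
    """
    merged = []
    for name, rows in sources.items():
        for row in rows:
            merged.append({"source": name, **row})
    return merged


def fit_line(xs, ys):
    """Predictive: estimate slope and intercept from data (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy inputs, invented for this example.
sources = {
    "system_a": [{"date": "2024-01-01", "value": 10}],
    "system_b": [{"date": "2024-01-01", "value": 12}],
}
merged = merge_sources(sources)

slope, intercept = fit_line([1, 2, 3, 4], [10, 20, 30, 40])
forecast = slope * 5 + intercept  # the prediction depends on what was learned
```

Change the training numbers and `fit_line` returns different parameters. Change the input rows and `merge_sources` still performs the same steps. That difference is what “learning from data patterns” means here.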

AI: buy or build?

Should you have an AI / Data Science team, or should you outsource? Should you build the infrastructure to support AI development in-house, or pay for an “out of the box” business solution?

For us, the answer is somewhere in between.

We’re not yet large enough to develop “foundation models” or advance groundbreaking research in-house. We don’t want to build physical computing clusters or data centres, nor do we want to build the AI supporting infrastructure from scratch – as these things require massive capital and time investment and are way more convenient to buy.

We use Google Cloud Platform, specifically services like Google Cloud Storage, Spanner and BigQuery for data storage and processing, and Google Kubernetes Engine for deploying AI models. This gives us a scalable and secure way to store and process data, as well as access to cutting-edge AI technology.

As a data-first company with unique data assets relevant to solving real-world problems in hospitality, we need people to continuously work with that data. Growing our own Data Science department was an easy decision.

What were the most important aspects of building a team?

Hire the right people

Our department includes experts from diverse backgrounds: from economics to theoretical physics, with experiences spanning business, academia, and industries like telecommunications, food, finance, and taxi services.

What I value the most in building a team is the breadth of different perspectives. We look for a “culture add”, not only a “culture fit”. This allows the team to deliver high-quality solutions by challenging each other’s ideas.

Create a culture of learning

We spend a lot of time exchanging ideas and following industry news and academia. It’s crucial to build an R&D organisation where people feel it’s ok to spend time on learning to bring better solutions.

We also realise that AI is evolving so quickly that it is impossible to keep up with everything while also doing the day job of researching new product features.

We occasionally partner with Google and external Google-certified AI experts, which gives us access to a wider range of expertise than we would have if we relied solely on internal teams. It’s a “learning curve booster”: we can learn from their experience right from the start.

Embrace innovation, and accept that things can fail

Innovation seems to be an easy sell. It often goes like this: “Hi! My team wants to run this innovative project. It’s a great idea – if it works it will bring a lot of revenue.”

However, everyone tends to forget the “if” and expects an innovation project to fit the mould of a standard project – one with a defined timeline and outcome.

That goes against the nature of data science and innovation, where failure is always a possible outcome. Building an environment that accepts this is easier said than done. Here is how we have reinforced the idea.

Innovation roadmap

Lighthouse has had a Data Science team almost since the beginning. We have researched and developed (Predictive) AI-driven solutions for multiple problems presented by our customers.

Let me showcase a few examples where we successfully delivered using predictive AI:

- Market Insight Demand – a first-in-class “nowcast” using forward-looking data. Rather than relying only on historical trends, it shows abrupt market changes and tells users what to focus on today.

- Smart compset – an AI model that chooses the most relevant competitor set for our customers. It’s used in Rate Insight, Market Insight and Benchmark Insight.

- Occupancy forecast – a prediction model that competes with the best players in the market.

- Price recommendations – a recommendation system used in the Pricing Manager that accounts for vast amounts of data to advise our customers on the best price point.

There were also many research projects that failed to deliver the expected outcome – a natural part of the innovation process.

Seemingly out of nowhere, ChatGPT was released in Q4 2022, gaining 1 million users in 5 days. People were convinced that Generative AI would solve everything, all white-collar jobs would be automated, and Data Scientists would become obsolete.

Fortunately for me, it didn’t turn out this way. We learned about the limitations of Large Language Models (LLMs): the cost, the scalability challenges and, most of all, the accuracy issues caused by (often hilarious) hallucinations.

We learned that GenAI is another tool in our AI toolbox – great for working with text and image data, but for other tasks it doesn’t work as well as Predictive AI or even automation.

Now, to the main point of this article: how did we learn all of this at Lighthouse?

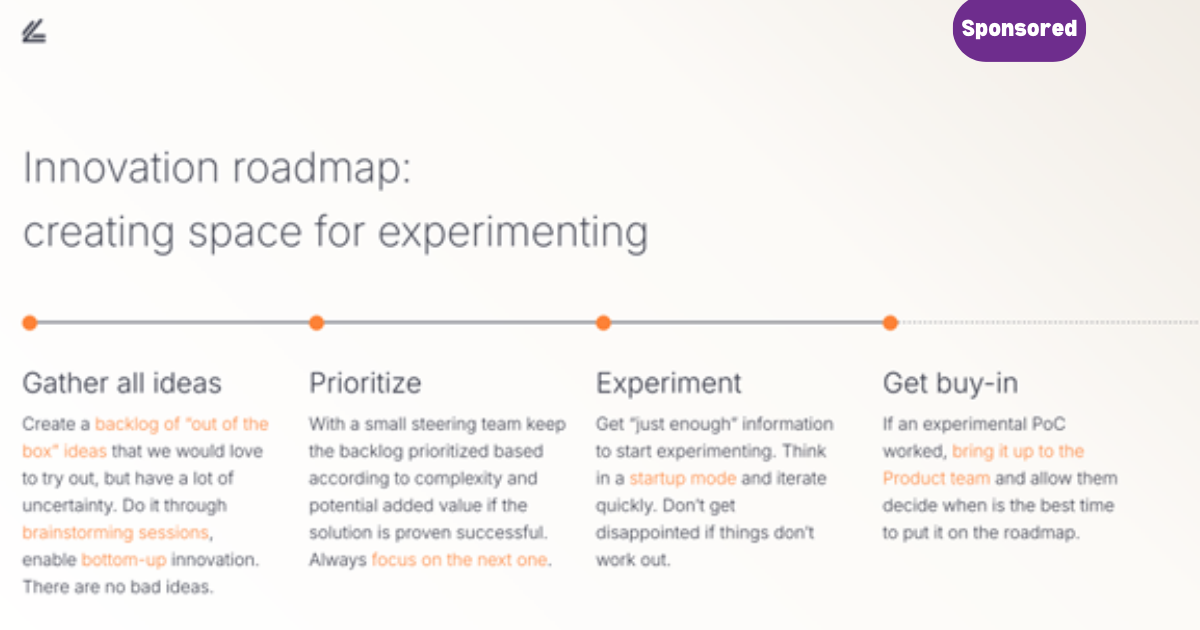

Our innovation roadmap approach

We invested more in experimentation and created an “Innovation roadmap”.

We categorise our data science projects into three types:

- Projects with predictable scope and outcome – we know exactly what we need, and we know which technology will get us there as we have worked on similar projects.

- Projects with unpredictable scope and predictable outcome – we know something will work, but there is uncertainty about the solution or the data.

- Projects with unpredictable scope and outcome – we don’t know whether something will work, nor how long it will take us to find out whether we have the right tools.

It’s the last category that we designate as part of our “Innovation roadmap”.
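The three categories can be sketched as a simple decision rule. This is an illustrative Python sketch, not our actual tooling: the names `ProjectType`, `Project` and `classify` and the example projects are hypothetical. The combination of predictable scope with unpredictable outcome is not one of our three types, so the sketch rejects it rather than guessing.

```python
from dataclasses import dataclass
from enum import Enum


class ProjectType(Enum):
    STANDARD = "predictable scope and outcome"
    EXPLORATORY = "unpredictable scope, predictable outcome"
    INNOVATION = "unpredictable scope and outcome"


@dataclass(frozen=True)
class Project:
    name: str
    scope_predictable: bool
    outcome_predictable: bool


def classify(project: Project) -> ProjectType:
    """Map a project to one of the three types described above."""
    if project.scope_predictable and project.outcome_predictable:
        return ProjectType.STANDARD
    if not project.scope_predictable and project.outcome_predictable:
        return ProjectType.EXPLORATORY
    if not project.scope_predictable and not project.outcome_predictable:
        return ProjectType.INNOVATION
    # Assumption: predictable scope with unpredictable outcome is not
    # covered by the three types, so it is flagged for discussion.
    raise ValueError(f"{project.name}: combination not covered by the three types")


def innovation_roadmap(projects):
    """Keep only the projects that belong on the innovation roadmap."""
    return [p for p in projects if classify(p) is ProjectType.INNOVATION]


# Hypothetical backlog, invented for this example.
backlog = [
    Project("familiar model refresh", True, True),
    Project("known idea, new data source", False, True),
    Project("open-ended GenAI experiment", False, False),
]
roadmap = innovation_roadmap(backlog)
```

With this backlog, only the open-ended experiment ends up on the roadmap. The other two are planned like standard projects, with more or less buffer on the timeline.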

Our innovation roadmap is instrumental in driving bottom-up innovation. When we have ideas but lack the knowledge to research them, we occasionally partner with Google’s certified AI experts, who help us navigate the complex landscape of AI.

One of the first projects that we tackled this way was a proof of concept for “Smart Summaries”. In this project we accelerated progress by partnering with Google’s certified AI experts to learn how best to build it. More about this in another of my posts here.

Key takeaways

- Choose the right strategic partner: This allows your company to stay at the forefront of AI advancements while focusing on core, industry-specific strengths.

- Build a strong, diverse team: People from different backgrounds bring different views and opinions, leading to better solutions.

- Embrace innovation and its risks: Implement strategies that help your business and stakeholders give your team the latitude to fail and learn.

To learn more about how Lighthouse can help you with AI, click here.