How to Fight Hallucination!

Episode 2 of my AI Series for Hoteliers

I believe that understanding the concepts behind the scenes is key to facing the AI wave. Today's concept is RAG (Retrieval Augmented Generation), a technical idea everyone needs to know.

And no, although the name might suggest it, this isn’t a blog about Scott Joplin’s superb ragtime compositions! 😉

Let’s dive in!

Imagine a Hotel Chatbot where a guest asks: “At what time can I jump into the water?”

You and I immediately understand what this means, even though we all agree it’s a rather roundabout way to ask for the pool opening hours. But with guests, anything is possible! So how do we approach understanding such a sentence?

The Traditional Computer Approach

A traditional computer approach would simply look up the words of the question in a list of keywords. However, this fails here because the term “swimming pool” doesn’t appear anywhere in the sentence.

While a complex system could search for synonyms, it would inevitably miss some variations.
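For the technically curious, here is a minimal Python sketch of that keyword approach. The topic names and keyword lists are hypothetical, invented for this example: a question that mentions the pool is recognized, but the guest’s “jump into the water” phrasing matches nothing.

```python
from typing import Optional

# Hypothetical keyword table: each topic lists the words we expect guests to use.
KEYWORDS = {
    "pool_hours": {"pool", "swimming", "swim"},
    "checkout": {"checkout", "check-out", "leave", "departure"},
    "breakfast": {"breakfast", "morning", "buffet"},
}

def match_topic(question: str) -> Optional[str]:
    """Return the first topic whose keywords appear in the question, or None."""
    # Lowercase and strip basic punctuation so "Pool?" still matches "pool".
    words = {w.strip("?!.,").lower() for w in question.split()}
    for topic, keywords in KEYWORDS.items():
        if words & keywords:
            return topic
    return None

print(match_topic("When does the swimming pool open?"))        # pool_hours
print(match_topic("At what time can I jump into the water?"))  # None
```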

The AI Approach: How “Water” Relates to “Pool”

How does AI understand such a sentence? It does so by turning words into points in space: words that are likely to appear in similar contexts end up close to each other.

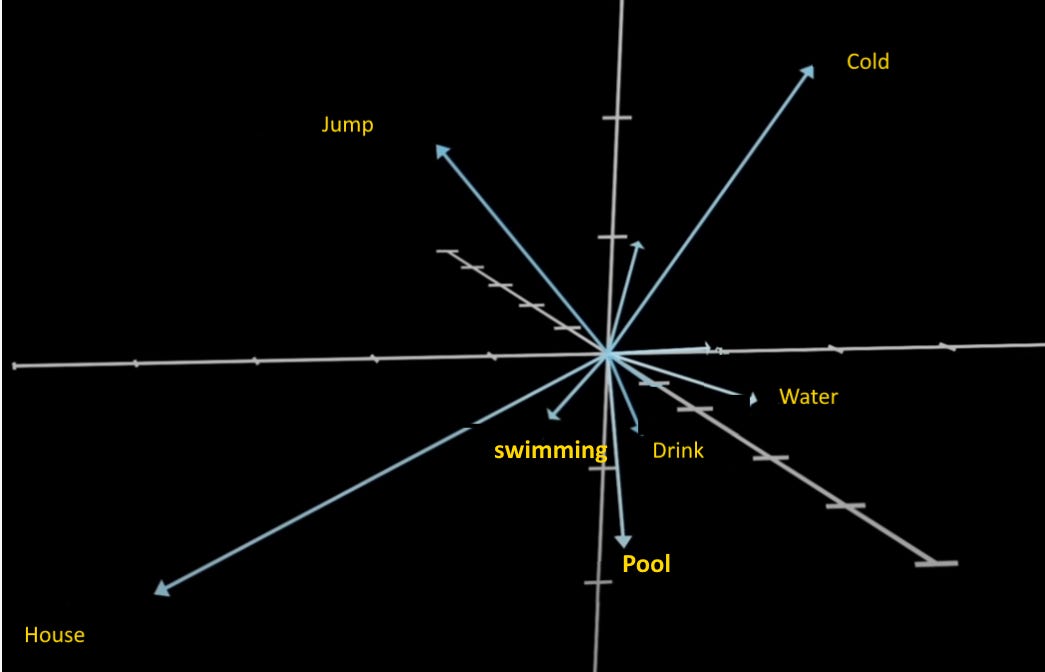

You might picture this in one or two dimensions, where “water” and “pool” sit close together. But by training on massive amounts of data and taking context into account (I’ll discuss Transformers in a future article), models build spaces with hundreds or even thousands of dimensions. The shorter the distance between two words, the more closely they are related.

I honestly don’t know how to draw more than three dimensions, but the picture above gives an idea of how the words in our guest’s request relate to each other. Each word is stored as a list of coordinates, one per dimension, which we call a “vector” (or “embedding”) because that’s how it is represented in space.
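To make “closeness” a little more concrete, here is a small Python sketch with made-up three-dimensional vectors (real models produce hundreds or thousands of numbers per word, learned from data). It uses cosine similarity, one common way of measuring how close two vectors are: values near 1 mean “very related”.

```python
import math

# Made-up 3-dimensional vectors, for illustration only.
VECTORS = {
    "water": [0.9, 0.8, 0.1],
    "pool": [0.8, 0.9, 0.2],
    "checkout": [0.1, 0.2, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means pointing the same way, values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

print(f"water vs pool:     {cosine(VECTORS['water'], VECTORS['pool']):.2f}")      # 0.99
print(f"water vs checkout: {cosine(VECTORS['water'], VECTORS['checkout']):.2f}")  # 0.30
```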

Practical Applications in the Hotel

If you let an LLM (Large Language Model) answer questions like “What time is checkout?” or “What is the guest reward program policy?” without guidance, it will often provide inaccurate answers, simply because it has never seen your hotel’s own policies and will still try hard to give a plausible-sounding answer… This is what we call hallucination.

Imagine the AI telling your guest he can leave at 5pm 😉

RAG at play

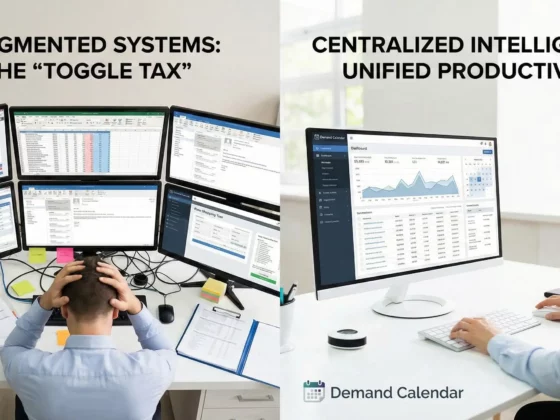

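To combat hallucination, we can feed our own documents (PDFs, text files, Word documents…) into the system. They are split into short passages and converted into the same kind of vectors, which are stored in a searchable index next to the LLM rather than inside it. When a guest asks a question, the passages closest to the question in that space are retrieved and given to the LLM along with the question.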
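Here is a toy Python sketch of the retrieval half of RAG, under loudly stated assumptions: the word vectors are hand-made (a real system would call an embedding model), the three passages are invented examples of hotel documents, and the “index” is a plain Python list instead of a vector database. It still shows the idea: the pool passage comes back for the guest’s question even though the word “pool” is never used.

```python
import math
import re

# Hypothetical, hand-made word vectors with 4 dimensions:
# [water & leisure, time & schedule, room & departure, food & dining].
# A real system would get much larger vectors from an embedding model.
WORD_VECTORS = {
    "pool": [1.0, 0.0, 0.0, 0.0],
    "swimming": [1.0, 0.0, 0.0, 0.0],
    "water": [0.9, 0.0, 0.0, 0.0],
    "open": [0.0, 1.0, 0.0, 0.0],
    "time": [0.0, 1.0, 0.0, 0.0],
    "checkout": [0.0, 0.3, 1.0, 0.0],
    "restaurant": [0.0, 0.0, 0.0, 1.0],
    "dinner": [0.0, 0.2, 0.0, 1.0],
}
DIMS = 4

def embed(text):
    """Sum the vectors of the known words in the text; unknown words are ignored."""
    vector = [0.0] * DIMS
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        if word in WORD_VECTORS:
            for i, value in enumerate(WORD_VECTORS[word]):
                vector[i] += value
    return vector

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical passages taken from the hotel's own documents.
DOCUMENTS = [
    "The swimming pool is open from 7am to 10pm.",
    "Checkout is at 11am; late checkout is available on request.",
    "The restaurant serves dinner from 7pm to 10pm.",
]

# Indexing step: embed every passage once and keep it next to its vector.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(question, k=1):
    """Return the k passages whose vectors are closest to the question's vector."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

print(retrieve("At what time can I jump into the water?"))
# ['The swimming pool is open from 7am to 10pm.']
```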

This lets us steer the LLM toward our actual data:

- HR departments can submit their PDFs containing company policies and procedures
- Chat applications can access your hotel’s specific information, like opening hours, services, and policies
- Customer service can rely on up-to-date product documentation and FAQs

This is where RAG becomes crucial. By retrieving relevant information from your actual documents and adding it to the question before the AI writes its answer, you greatly improve accuracy while keeping the AI’s natural language understanding. It’s like giving the AI a personalized knowledge base for your hotel.
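The “augmented” step can be sketched in a few lines of Python. The wording of the instructions and the `build_prompt` helper are hypothetical; the resulting text would then be sent to whichever LLM you use, which is not shown here.

```python
def build_prompt(question, passages):
    """Combine retrieved passages and the guest's question into one prompt for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "You are the hotel's assistant. Answer the guest using ONLY the information below.\n"
        "If the answer is not there, say you don't know and suggest contacting reception.\n\n"
        f"Information:\n{context}\n\n"
        f"Guest question: {question}\n"
        "Answer:"
    )

prompt = build_prompt(
    "At what time can I jump into the water?",
    ["The swimming pool is open from 7am to 10pm."],
)
print(prompt)
```

Telling the model to admit when the answer is missing is a simple way to discourage it from filling gaps with plausible guesses, although no prompt can guarantee that completely.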

Remember, while AI is powerful, it needs to be grounded in your actual business data to be truly useful. RAG is the bridge between AI’s language capabilities and your hotel’s specific information.

In the next episode, we’ll explore Transformers and how they’ve revolutionized AI’s ability to understand context. Stay tuned!

Perplexity.ai

I’d like to introduce you to a tool I now use every day: www.perplexity.ai

It has essentially replaced Google for me!

While Google simply points you to existing content, Perplexity combines several sources to produce a much better answer to non-trivial questions.

Try it!