OpenAI released yet another add-on to its growing suite of AI tools: DeepResearch. The product, which shares its name with Google Gemini’s Deep Research tool, also does nearly the exact same thing. For a given research question, it will formulate a research plan and consult a variety of sources to provide a compelling research brief.

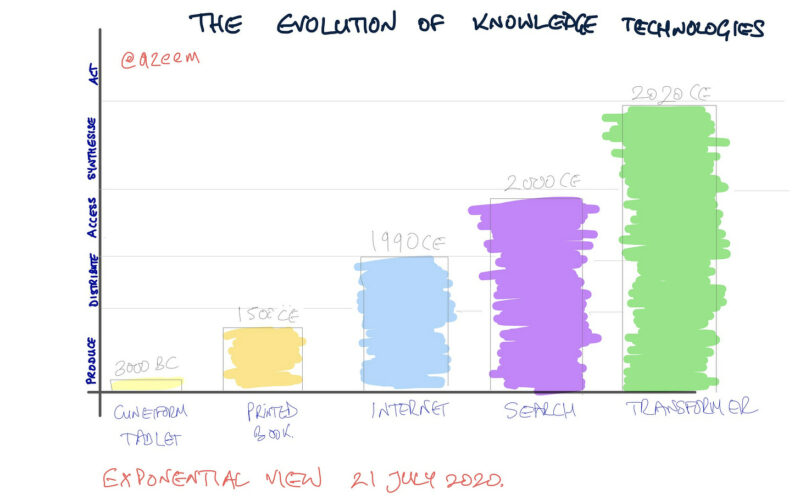

DeepResearch is a milestone in how we access and manipulate knowledge. When GPT-3 was first made available a few years ago, it quickly became clear that these LLM-based tools would create a new paradigm for accessing information. In A Short History of Knowledge Technologies, I argued:

[GPT-3] does something the search engine doesn’t do, but which we want it to do. It is capable of synthesising the information and presenting it in a near usable form.

Four years is an eternity in AI, of course. GPT-4 is much more powerful than GPT-3, and the new family of reasoning models, o1 and o3, is in a class of its own. DeepResearch from OpenAI goes much further than my short quote suggested.

I have run several queries through DeepResearch. Each time I pass a request to DeepResearch it evaluates it and, like a good researcher, asks for clarifications. In one of these, I asked it to research the comparative environmental costs — from energy, water, waste, and emissions — of a range of mainstream activities.

Once I had responded to the question, DeepResearch disappeared off to do the work. In this case, the bot worked for 73 minutes and consulted more than 29 sources. The output was a table covering 11 different activities with six different dimensions of environmental impact. The full text is 1,900 words, excluding the dozens of footnote hyperlinks.

For 73 minutes’ work, this is excellent. I certainly could not have done this in an hour. For a researcher receiving this output, it would form a strong basis to push further, probably assisted by other AI tools to produce a more detailed and robust outcome.

This was a pretty basic data-gathering query. And readers of Exponential View will benefit from it in a future essay in which I compare the environmental load of ChatGPT and other tools to mainstream leisure activities. (Let’s just say Disney World makes data centres look like paragons of sustainability.)

But I have dug deeper with DeepResearch. LLMs are not mere stochastic parrots, as was once argued. Their ability to find more abstract relationships between words, potentially teasing out conceptual connections, goes beyond simple steroidal autocorrect.

And this conceptual capability, coupled with the primitive reasoning that models such as o1 and o3 offer, means we can do far more sophisticated analysis. One example I tried was an evaluation of the impact of DeepSeek on the AI sector, including geopolitical ramifications. In this case, I asked DeepResearch to also consider ‘What if’ scenarios. The final 7,946-word report took 12 minutes.

I was impressed. The report was well-structured. Each section followed a sensible, logical approach. Initially, it presented a baseline of agreed facts before moving into further analysis. Each section concluded with two or three scenarios, as the tool provided a “what if” analysis.

The quality? I would equate the output with what a strategy consulting team could produce in two or three days working flat out. Certainly, if I were going to create something with that level of depth, it would have taken more than a couple of days. There are limitations: it may be prone to a bit of simplism, but nothing a little editing couldn’t fix. You can read it in full below and judge for yourself.